Transformers and Attention: The Backbone of LLMs


Topic 1: Transformers and Attention – The Backbone of LLMs

Welcome to the first deep dive in the series! In this topic, we’ll explore Transformers and Attention, two concepts that form the core of modern Large Language Models (LLMs) like Gemini and GPT. But before we dive into this, it’s important to have a basic understanding of Machine Learning and Deep Learning concepts. If you’re new to these topics, don’t worry! You can check out these excellent courses to build foundational knowledge.

Course Recommendations

- Machine Learning Specialization (Stanford & DeepLearning.AI) | Coursera - A beginner-friendly, 3-course program by AI visionary Andrew Ng covering fundamental AI and ML concepts.

- DeepLearning.AI’s Deep Learning Specialization on Coursera – A comprehensive, intermediate-level series to get you up to speed with the basics of deep learning with neural networks.

What is Language Modeling?

Language modeling is the task of predicting the next word or sequence of words in a sentence based on the preceding context. It involves understanding the structure, grammar, and meaning of language, which enables a model to generate coherent and contextually appropriate text. Language models are trained on large corpora of text and learn the statistical relationships between words or tokens, which allows them to predict how likely a word is to appear next in a sequence.

The model generates text by using the previous words (or tokens) as context to predict the next word, phrase, or sentence. For example:

- Context: “The weather today is”

- Prediction: “sunny” (or any other word that fits based on the model’s training)

Language models are widely used in many NLP tasks, such as text generation, summarization, and machine translation. Traditional language models, like n-gram models, used simple statistical methods to predict the next word based on the preceding ones. However, modern deep learning-based language models use more powerful neural networks to learn more complex patterns and relationships within the language.
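To make the n-gram idea concrete, here is a minimal bigram model (an n-gram model with n = 2): it conditions only on the single preceding word and estimates next-word probabilities from raw counts. The toy corpus and the function names `train_bigram` and `next_word_probs` are illustrative assumptions, not part of any library.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows each other word (a bigram model)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the raw follow-counts for `prev` into probabilities P(next | prev)."""
    following = counts.get(prev, Counter())
    total = sum(following.values())
    return {word: c / total for word, c in following.items()} if total else {}


corpus = [
    "The weather today is sunny",
    "The weather today is rainy",
    "The weather today is sunny and warm",
]
model = train_bigram(corpus)
probs = next_word_probs(model, "is")
print(max(probs, key=probs.get))  # sunny (seen 2 of 3 times after "is")
```

A model like this cannot see anything beyond the previous word, which is exactly the kind of limitation that neural language models were designed to overcome.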

Before Transformers, models like Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks were used for sequence processing. RNNs and LSTMs processed text one token at a time, maintaining a memory of previous tokens. However, they struggled with long-range dependencies, as their memory faded over long sequences, making it difficult to capture relationships in complex texts.
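The sketch below illustrates the sequential bottleneck with a toy scalar RNN. The weights `w_x` and `w_h` are arbitrary illustrative values, not trained ones. Each hidden state depends on the previous one, so the steps must run in order, and the influence of the first input shrinks at every step. This is a simplified picture: the classic difficulty is usually described in terms of vanishing gradients during training, and LSTMs add gates to slow this fading without removing the step-by-step dependency.

```python
import math


def rnn_forward(inputs, w_x=0.5, w_h=0.9, b=0.0):
    """Run a scalar Elman-style RNN over a sequence, one step at a time.

    Step t needs the hidden state from step t-1, so the loop cannot be
    parallelized across positions.
    """
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# A signal at the first position, followed by 20 zeros.
states = rnn_forward([1.0] + [0.0] * 20)
print(f"after 1 step: {states[0]:.3f}, after 21 steps: {states[-1]:.3f}")
```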

Reading Recommendations

- Excellent Blog for understanding LSTMs: https://colah.github.io/posts/2015-08-Understanding-LSTMs/

- Why LSTM/RNN Fail: https://towardsdatascience.com/the-fall-of-rnn-lstm-2d1594c74ce0

- Sequence to Sequence Models: Understanding Encoder-Decoder Sequence to Sequence Model | by Simeon Kostadinov | Towards Data Science

The Transformer architecture addresses these limitations of RNNs and LSTMs by using attention mechanisms, which allow the whole sequence to be processed in parallel and capture dependencies between tokens regardless of how far apart they are.

What are Transformers and Attention?

Transformers are the backbone of modern Natural Language Processing (NLP) and the primary architecture behind LLMs. Introduced by researchers at Google in the groundbreaking paper “Attention Is All You Need” (Vaswani et al., 2017), Transformers revolutionized the way we process sequences of data, particularly for language modeling. The Transformer architecture was initially developed for translation: it is a sequence-to-sequence model designed to convert sequences from one domain to another, such as translating French sentences into English.

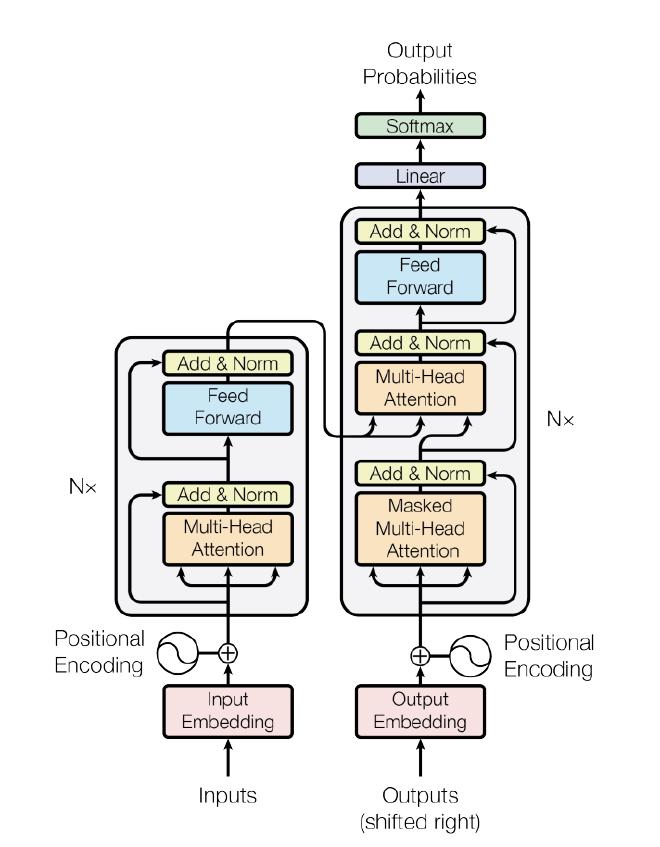

The original Transformer architecture (shown in Figure 1) consists of two main components: the encoder and the decoder. The encoder processes the input text (e.g., a French sentence) and transforms it into a numerical representation. This representation is then passed to the decoder, which generates the output text (e.g., an English translation) in an autoregressive manner, meaning one word is predicted at a time based on the previously generated words.
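Autoregressive generation can be sketched as a loop that repeatedly asks the decoder for the next token and appends it to the prefix. In this sketch, `TOY_DECODER` is a hypothetical lookup table standing in for a trained model, and it ignores the encoder's representation of the source sentence. Greedy selection is just one decoding strategy; beam search and sampling are common alternatives.

```python
def greedy_decode(next_token_scores, start="<s>", end="</s>", max_len=10):
    """Generate tokens one at a time, feeding each prediction back in.

    `next_token_scores(prefix)` stands in for the decoder: given the tokens
    generated so far, it returns a dict mapping candidate token -> score.
    """
    output = [start]
    for _ in range(max_len):
        scores = next_token_scores(output)
        token = max(scores, key=scores.get)  # greedy: take the top-scoring token
        if token == end:
            break
        output.append(token)
    return output[1:]  # drop the start symbol


# Hypothetical table playing the role of a trained decoder.
TOY_DECODER = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.7, "dog": 0.3},
    "cat": {"sleeps": 0.6, "</s>": 0.4},
    "sleeps": {"</s>": 1.0},
}


def toy_scores(prefix):
    # This toy only looks at the last token; a real decoder attends to the whole prefix.
    return TOY_DECODER[prefix[-1]]


print(greedy_decode(toy_scores))  # ['the', 'cat', 'sleeps']
```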

Don’t worry about the complex diagram above; I don’t expect you to be familiar with terms like multi-head attention just yet :). I’ll break down the key components of the Transformer for you and share the best resources to explore them in depth.

These are the key components of a transformer model.

Input Embedding/Output Embedding:

- Input embeddings are used to represent the input tokens (e.g., words or subwords) provided to the model, while output embeddings represent the tokens predicted by the model. For instance, in a machine translation task, the input embeddings correspond to words in the source language, and the output embeddings correspond to words in the target language.
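Conceptually, an embedding layer is a lookup table from token to vector. This sketch assumes whitespace tokenization and a tiny made-up vocabulary and fills the table with random values. In a trained model the vectors are learned parameters, and real tokenizers usually produce subwords rather than whole words.

```python
import random


def make_embedding_table(vocab, dim, seed=0):
    """Map each token to a vector of `dim` numbers.

    Randomly initialized here; in a real model these values are learned.
    """
    rng = random.Random(seed)
    return {tok: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for tok in vocab}


def embed(tokens, table):
    """Replace each token with its embedding vector."""
    return [table[tok] for tok in tokens]


vocab = ["the", "weather", "today", "is", "sunny"]
table = make_embedding_table(vocab, dim=4)
vectors = embed("the weather today is".split(), table)
print(len(vectors), len(vectors[0]))  # 4 4
```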

Positional Encoding:

- Since Transformers don’t process tokens one at a time the way RNNs do, positional encoding is used to add information about each word’s position in the sentence, so that the model can take word order into account.
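The original paper uses fixed sinusoidal encodings; many later models learn positional embeddings instead. The sketch below follows the paper's formulas, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)), and assumes embeddings are plain Python lists of floats. The helper `add_positions` is a hypothetical name for illustration.

```python
import math


def sinusoidal_positional_encoding(num_positions, d_model):
    """Return a num_positions x d_model table of sinusoidal position encodings."""
    pe = []
    for pos in range(num_positions):
        row = []
        for j in range(d_model):
            i = j // 2  # each (sin, cos) pair of dimensions shares one frequency
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def add_positions(embeddings):
    """Add the positional encoding element-wise to a list of token embeddings."""
    pe = sinusoidal_positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]


pe = sinusoidal_positional_encoding(num_positions=3, d_model=4)
print([round(v, 3) for v in pe[1]])  # [0.841, 0.54, 0.01, 1.0]
```

Low dimensions oscillate quickly with position while high dimensions change slowly, so every position gets a distinct pattern.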

Attention: This is where the magic happens!

- Attention allows a model to focus on the most relevant parts of the input while processing a sequence. This is crucial for understanding language because words can have relationships that extend over long distances. For example, in the sentence “The cat that chased the mouse was very fast”, attention helps the model understand that “cat” and “was” are related, even though they are far apart.

- The primary type of attention in Transformers is self-attention, which allows each word (or token) in a sequence to “attend” to all the other words in the sequence when making predictions. This capability enables the Transformer to capture contextual relationships at scale.
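At its core, the original Transformer uses scaled dot-product attention, softmax(QKᵀ / √d_k) V, where the queries Q, keys K and values V are linear projections of the token representations. This pure-Python sketch uses hand-picked identity projections purely for illustration. Real implementations use learned weight matrices and a tensor library.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[dot(row, col) for col in cols] for row in a]


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, k, v):
    """Compute softmax(Q K^T / sqrt(d_k)) V; return the outputs and the weights."""
    d_k = len(k[0])
    # Row i holds how strongly query i matches every key.
    scores = [[dot(qi, kj) / math.sqrt(d_k) for kj in k] for qi in q]
    weights = [softmax(row) for row in scores]
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, v), weights


def self_attention(x, w_q, w_k, w_v):
    """Self-attention: queries, keys and values are all projections of the same sequence x."""
    return scaled_dot_product_attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three tokens, 2-dim embeddings
identity = [[1.0, 0.0], [0.0, 1.0]]        # illustrative projections, not learned ones
out, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how one token distributes its attention over all three tokens, and every row sums to 1.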

Figure 2: A word “attends” differently to each of the other words in the same sentence.

Ref: https://lilianweng.github.io/posts/2018-06-24-attention/

- Multi-Head Attention: Instead of a single attention mechanism, multi-head attention runs several attention “heads” in parallel, each with its own learned projections, so the model can learn different relationships in the data simultaneously. This allows the model to capture more diverse patterns.

- Masked Multi-Head Attention: The model is restricted so that each token can attend only to itself and the tokens before it, never to future tokens it has not generated yet. This is used in the decoder part of the Transformer.
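Multi-head attention and masking can be sketched together in pure Python. Each head applies its own (w_q, w_k, w_v) projections, the heads' outputs are concatenated and passed through an output projection w_o, and an optional causal mask sets the scores of future positions to -inf before the softmax so they receive zero weight. The hand-picked matrices (each head reading a different slice of the embedding, and an identity w_o) are illustrative assumptions; in a real model all of them are learned.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[dot(row, col) for col in cols] for row in a]


def softmax(xs):
    m = max(xs)  # finite, because each position can always attend to itself
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v, causal=False):
    """Scaled dot-product attention. With causal=True, position i may only attend
    to positions 0..i: future scores become -inf, so they get zero weight."""
    d_k = len(k[0])
    weights = []
    for i, qi in enumerate(q):
        scores = [dot(qi, kj) / math.sqrt(d_k) for kj in k]
        if causal:
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights.append(softmax(scores))
    return matmul(weights, v), weights


def multi_head_attention(x, heads, w_o, causal=False):
    """Run every head's own (w_q, w_k, w_v) projections, concatenate the head
    outputs for each token, then mix them with the output projection w_o."""
    head_outputs, head_weights = [], []
    for w_q, w_k, w_v in heads:
        out, w = attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v), causal)
        head_outputs.append(out)
        head_weights.append(w)
    concat = [sum((h[t] for h in head_outputs), []) for t in range(len(x))]
    return matmul(concat, w_o), head_weights


x = [[1.0, 0.0, 0.5, 0.0],
     [0.0, 1.0, 0.0, 0.5],
     [1.0, 1.0, 1.0, 1.0]]                                 # 3 tokens, d_model = 4
head_1 = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0]]  # reads dims 0-1
head_2 = [[0.0, 0.0], [0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # reads dims 2-3
w_o = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
heads = [(head_1, head_1, head_1), (head_2, head_2, head_2)]
out, head_weights = multi_head_attention(x, heads, w_o, causal=True)
print([round(w, 2) for w in head_weights[0][1]])  # token 1 gives token 2 zero weight
```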

Blogs explaining attention in detail:

Feed-Forward Layers:

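- After the attention layer, a feed-forward network is applied to each token’s representation independently, with the same weights used at every position. In the original paper it consists of two linear transformations with a ReLU activation in between, which adds non-linearity and extra capacity to the model.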
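The original paper writes this position-wise network as FFN(x) = max(0, xW1 + b1)W2 + b2. The matrices below are made-up values for illustration. The paper's inner dimension (2048) is much larger than its model dimension (512); here they are just 4 and 2.

```python
def feed_forward(x, w1, b1, w2, b2):
    """Position-wise FFN: max(0, x W1 + b1) W2 + b2, applied to each token
    vector independently with the same weights."""
    out = []
    for token in x:
        # First linear layer followed by ReLU.
        hidden = [max(0.0, sum(t * w1[i][j] for i, t in enumerate(token)) + b1[j])
                  for j in range(len(b1))]
        # Second linear layer projects back to the model dimension.
        out.append([sum(h * w2[j][k] for j, h in enumerate(hidden)) + b2[k]
                    for k in range(len(b2))])
    return out


x = [[1.0, -1.0], [0.5, 2.0]]          # two tokens, d_model = 2
w1 = [[1.0, -1.0, 0.5, 0.0],
      [0.0, 1.0, 0.5, -1.0]]           # 2 x 4 (d_ff = 4)
b1 = [0.0, 0.0, 0.0, 0.0]
w2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 1.0]]  # 4 x 2
b2 = [0.0, 0.0]
print(feed_forward(x, w1, b1, w2, b2))  # [[1.0, 1.0], [1.75, 2.75]]
```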

Add & Norm Layers:

- Each sub-layer in a Transformer layer (the multi-head attention module and the feed-forward network) is wrapped in a residual connection followed by layer normalization. This corresponds to the Add & Norm blocks in Figure 1, where ‘Add’ is the residual connection and ‘Norm’ is layer normalization.
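The original Transformer applies normalization after the residual addition, LayerNorm(x + Sublayer(x)); many later models normalize before the sub-layer instead. In this minimal sketch, `double` is a hypothetical stand-in for a real sub-layer such as attention or the feed-forward network, and the learnable scale and shift (`gamma`, `beta`) default to 1 and 0.

```python
import math


def layer_norm(v, gamma=None, beta=None, eps=1e-5):
    """Normalize one vector to zero mean and unit variance, then scale and shift."""
    n = len(v)
    gamma = [1.0] * n if gamma is None else gamma
    beta = [0.0] * n if beta is None else beta
    mean = sum(v) / n
    var = sum((x - mean) ** 2 for x in v) / n
    return [g * (x - mean) / math.sqrt(var + eps) + b for x, g, b in zip(v, gamma, beta)]


def add_and_norm(x, sublayer):
    """Post-norm residual block: LayerNorm(x + Sublayer(x)) for each token vector."""
    y = sublayer(x)
    return [layer_norm([a + b for a, b in zip(xi, yi)]) for xi, yi in zip(x, y)]


def double(seq):
    """Hypothetical stand-in sub-layer that just doubles its input."""
    return [[2.0 * val for val in tok] for tok in seq]


out = add_and_norm([[1.0, 2.0, 3.0]], double)
print([round(v, 3) for v in out[0]])  # [-1.225, 0.0, 1.225]
```

The residual path lets the original signal (and its gradient) flow around each sub-layer, while the normalization keeps activations in a stable range.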

Output Layer:

- The output layer (in Figure 1, a linear layer followed by a softmax) produces the model’s final predictions, converting the computed values into probabilities for tasks like classification or text generation.
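For text generation, the output layer maps the final hidden vector of a position to one score (logit) per vocabulary word, and the softmax turns those scores into a probability distribution over the next word. The weights and the three-word vocabulary below are hypothetical, and the linear layer is written without a bias for brevity.

```python
import math


def output_layer(hidden, w_out, vocab):
    """Project a hidden vector to one logit per vocabulary word, then apply softmax."""
    logits = [sum(h * w_out[i][j] for i, h in enumerate(hidden)) for j in range(len(vocab))]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return {word: e / total for word, e in zip(vocab, exps)}


vocab = ["sunny", "rainy", "cloudy"]
w_out = [[2.0, 0.5, 1.0],   # hypothetical weights: d_model = 2, vocabulary size = 3
         [1.0, 0.0, 0.5]]
probs = output_layer([1.0, 1.0], w_out, vocab)
print(max(probs, key=probs.get))  # sunny
```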

Reading Recommendations

- I highly recommend this blog to anyone who wants to understand transformers and attention in the simplest possible terms. It explains all of the above concepts beautifully and visually: The Illustrated Transformer – Jay Alammar

- YouTube video on the same topic: https://www.youtube.com/watch?v=-QH8fRhqFHM

- Transformer Paper: [1706.03762] Attention Is All You Need

- Hugging Face’s Course on Transformers – Hands-on tutorials with practical implementation examples.

Other References/Resources

- Residual Networks: [1512.03385] Deep Residual Learning for Image Recognition

- Layer Normalization: [1607.06450] Layer Normalization

Wrapping Up

- Transformers revolutionized large language models (LLMs) and AI with the introduction of the self-attention mechanism, allowing models to effectively capture long-range dependencies and contextual relationships across entire sequences.

- Unlike RNNs and LSTMs, Transformers process data in parallel, significantly enhancing scalability and training speed.

- The architecture supports massive pretraining on diverse datasets, followed by fine-tuning for specific tasks, enabling powerful transfer learning capabilities.

- This scalability and flexibility have driven breakthroughs in performance, enabling the development of state-of-the-art LLMs like GPT and BERT.

- These models excel in a variety of tasks, including text generation, translation, and summarization.

I hope you enjoyed the article and found the insights on transformers and their impact on AI and large language models helpful. If you have any questions or would like to explore the topic further, feel free to reach out!

Happy Reading! :D